Using an 8-channel EEG BCI for human emotion recognition and iOS device control

Spring 2021

This was the final project for the Brain-Computer Interface (BCI) class (ESC 527) that I took in Spring 2021, in conjunction with the Gluckman Lab, which provided the tools for electroencephalogram (EEG) recordings.

The goal of the project was to implement a BCI of any kind based on EEG recordings.

I decided to program a BCI that remotely navigates a social media feed on any iOS device, based solely on the emotions evoked in the user.


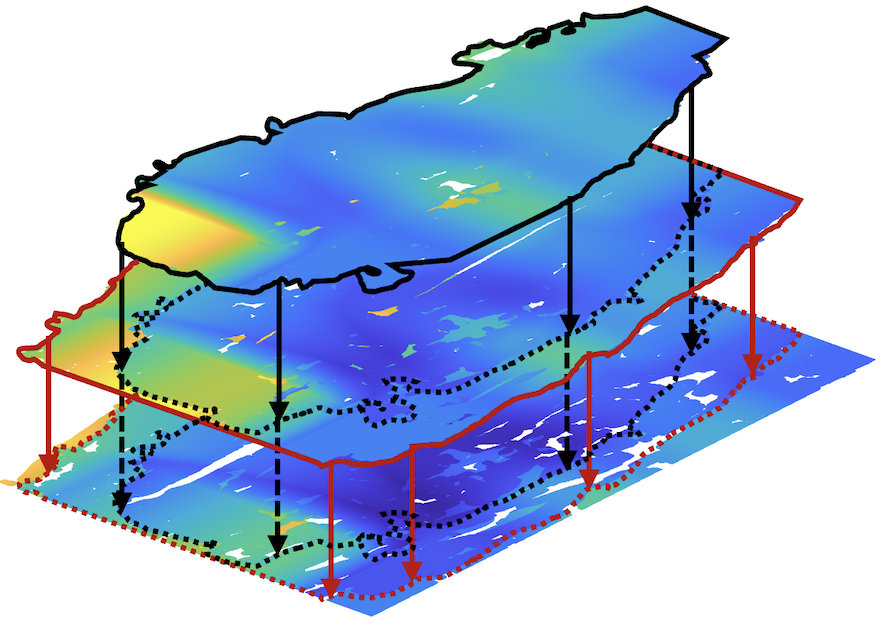

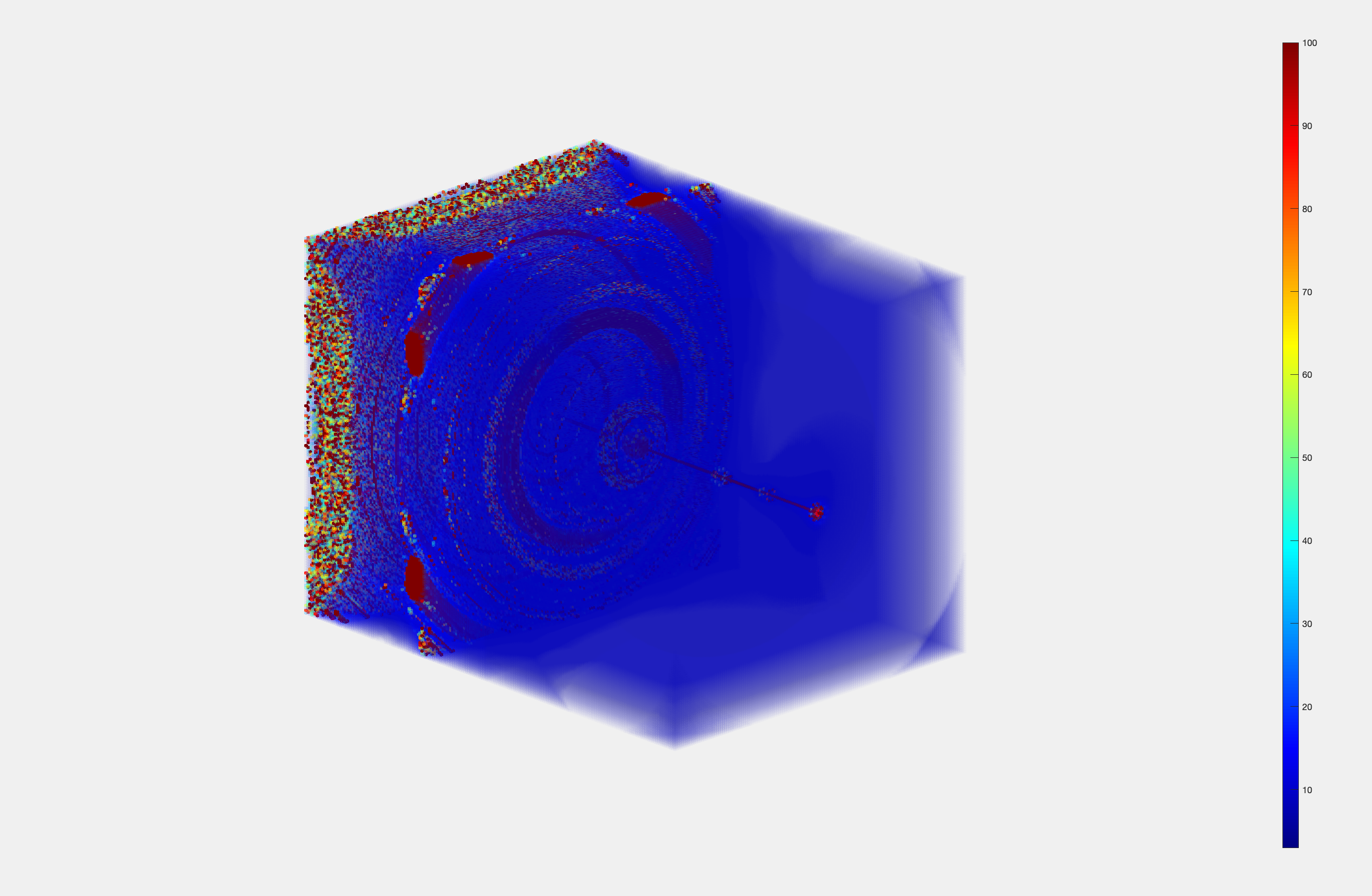


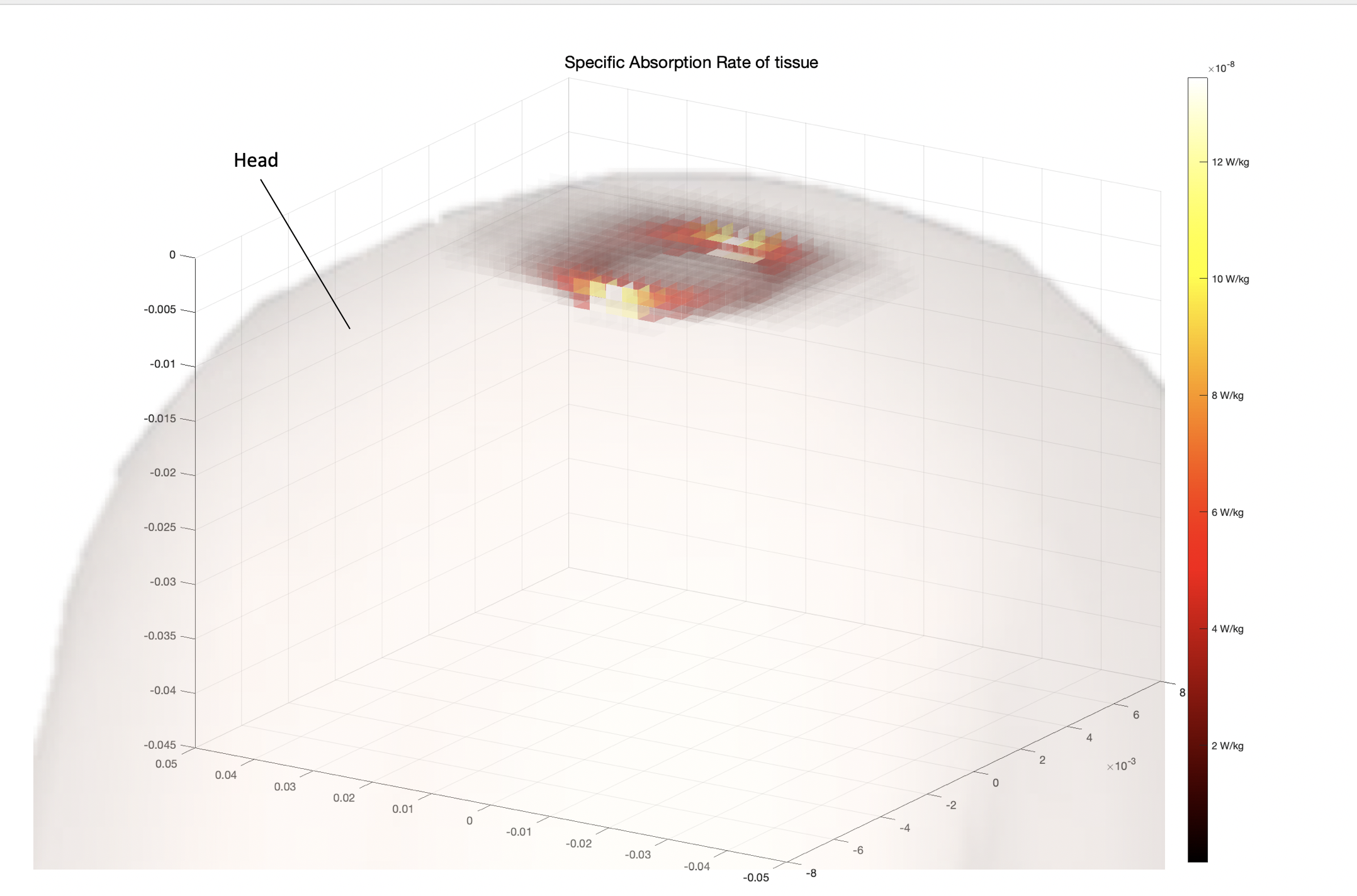

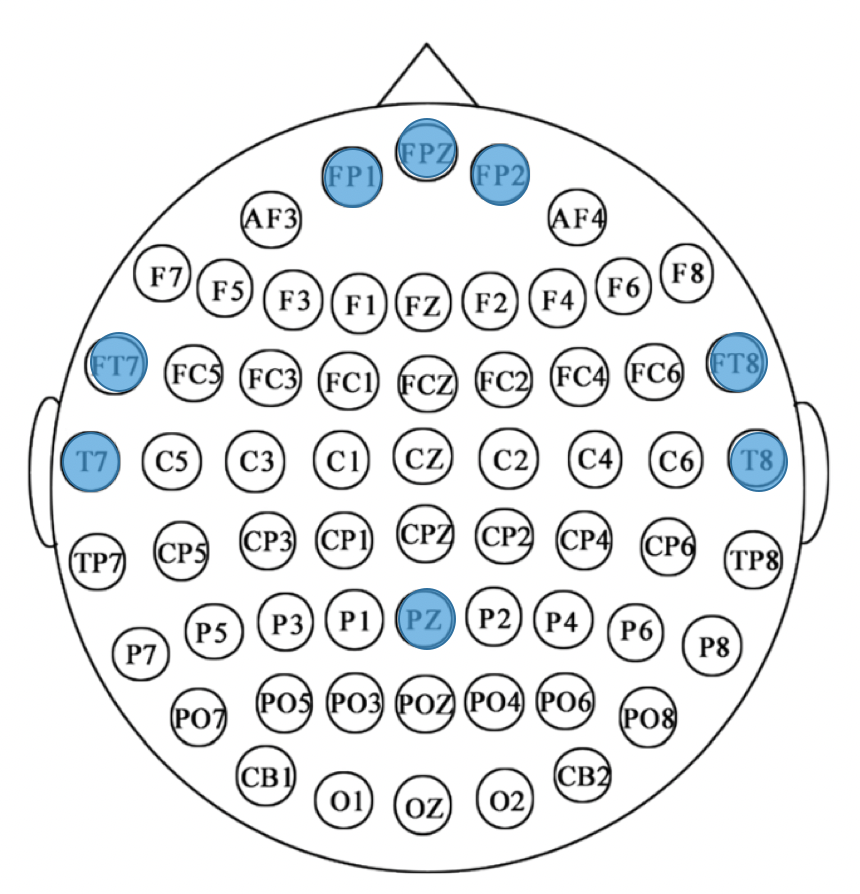


Design

The overall approach is similar to the one used in sensorimotor rhythm (SMR) BCIs, which move a cursor on the screen based on motor imagery recorded over motor-related cortical regions.

Similarly, I used emotional imagery, recorded from regions involved in emotional responses where alpha and beta rhythms are prominent, to identify in real time the emotions evoked by external stimuli. For reference:

- Alpha rhythms reflect attentional processing

- Beta rhythms reflect emotional and cognitive processing
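As a rough illustration of the features involved, the sketch below estimates alpha (8 to 12 Hz) and beta (13 to 30 Hz) band power for one EEG channel epoch with a plain DFT periodogram, then combines the features with a linear decoder, similar in spirit to the linear classifiers used for SMR cursor control. The band edges, the sampling rate, and the names `band_power`, `alpha_beta_features` and `linear_output` are assumptions for illustration, not the parameters or code used in this project; a real pipeline would more likely rely on an optimized estimator such as `scipy.signal.welch`.

```python
import math
from typing import Sequence


def band_power(signal: Sequence[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Mean periodogram power of `signal` over DFT bins whose frequency lies in [f_lo, f_hi] Hz.

    Uses a plain DFT evaluated only at the needed bins, so the sketch needs only the standard library.
    """
    n = len(signal)
    if n == 0:
        raise ValueError("signal must not be empty")
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset before estimating power
    total, bins = 0.0, 0
    for k in range(n // 2 + 1):  # non-negative frequencies only
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
            im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
            total += (re * re + im * im) / n  # periodogram value at bin k
            bins += 1
    return total / bins if bins else 0.0


# Hypothetical band definitions; the project's exact bands are not stated.
ALPHA_BAND = (8.0, 12.0)
BETA_BAND = (13.0, 30.0)


def alpha_beta_features(signal: Sequence[float], fs: float) -> list[float]:
    """Return [alpha power, beta power] for one channel epoch."""
    return [band_power(signal, fs, *ALPHA_BAND), band_power(signal, fs, *BETA_BAND)]


def linear_output(features: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Weighted sum of features plus bias; its sign could separate two emotion classes."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return sum(w * f for w, f in zip(weights, features)) + bias


if __name__ == "__main__":
    fs = 250.0  # assumed sampling rate in Hz
    # Synthetic 1 s epoch: a pure 10 Hz (alpha-band) sine wave.
    epoch = [math.sin(2 * math.pi * 10 * t / fs) for t in range(int(fs))]
    alpha, beta = alpha_beta_features(epoch, fs)
    print(f"alpha={alpha:.3f} beta={beta:.3e}")
```

Computing these features for each of the 8 channels and concatenating them gives one feature vector per epoch; fitting the weights (for example on labeled calibration trials) is left out of this sketch.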

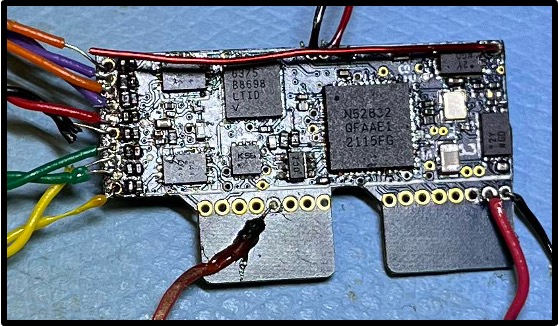

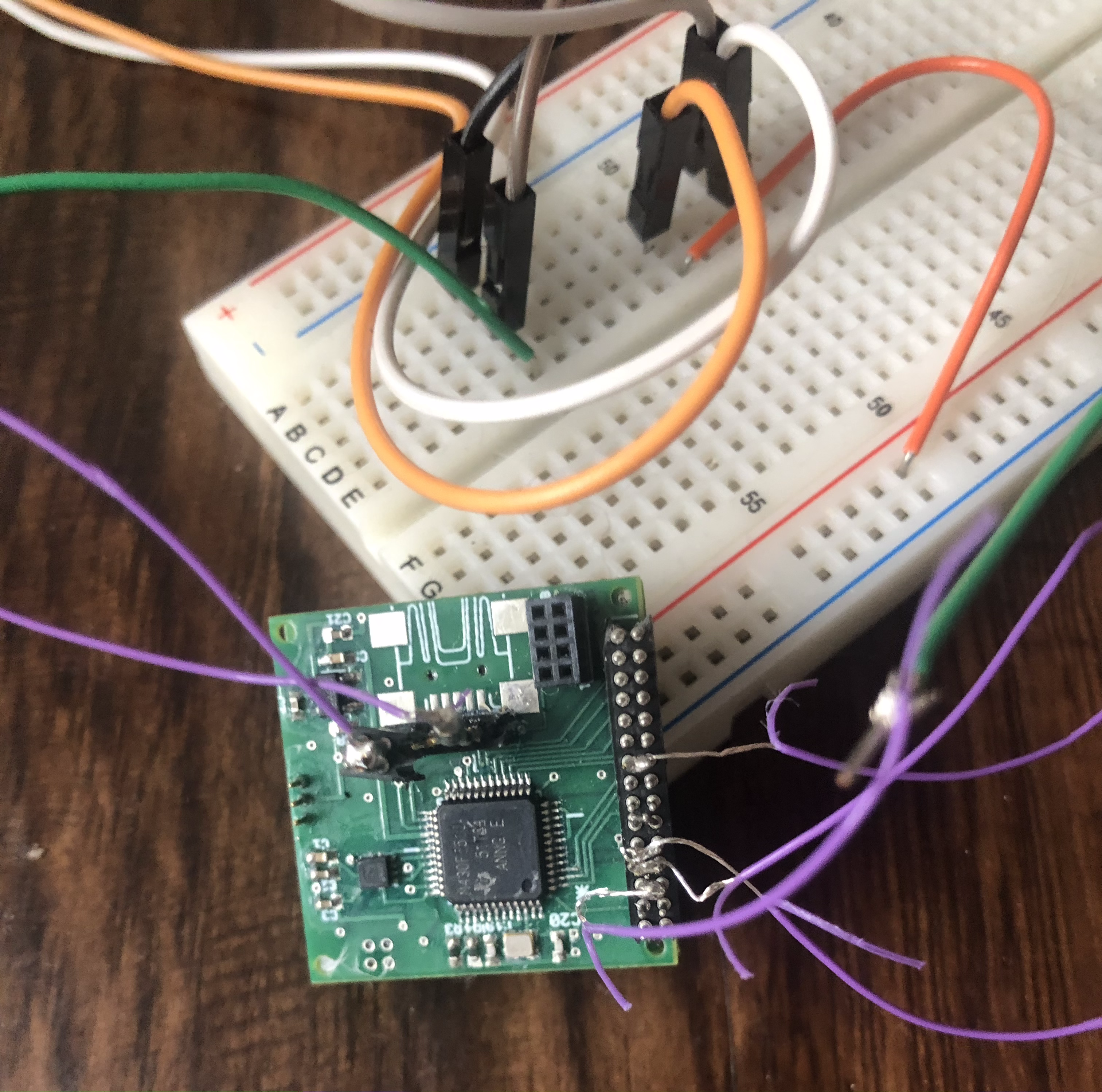

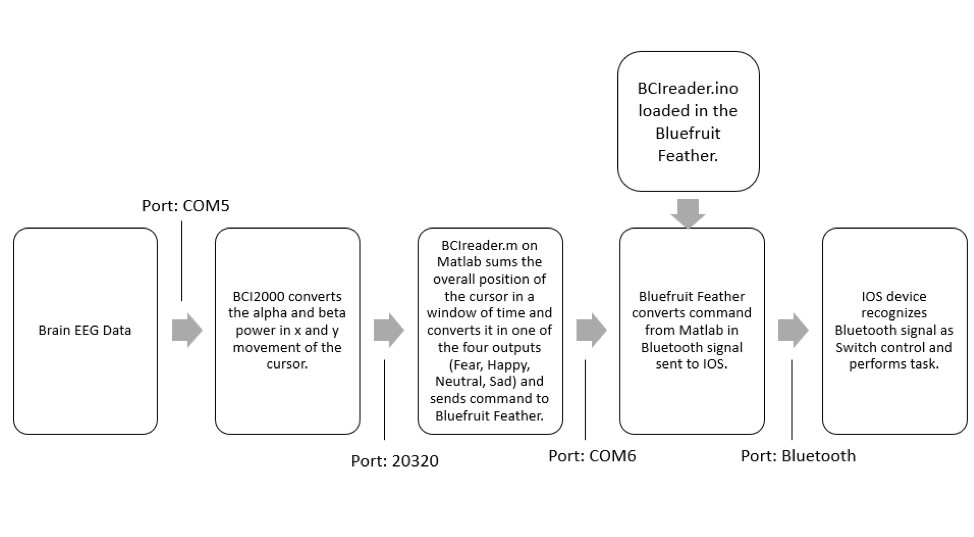

Once emotions are reliably classified, BCI2000's real-time data export makes it possible to convert the classifier output into 4 distinct responses. These are sent from the computer to a connected microcontroller (Bluefruit Feather), which converts them into Bluetooth signals readable by any iOS device.
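The hand-off from the classifier to the microcontroller can be sketched as one command byte per response written to a serial link. The command bytes (`b"A"` to `b"D"`), the function names, and the assumption that the computer talks to the Bluefruit Feather over a serial (USB) port are all hypothetical; the write goes through any binary stream object, such as a `serial.Serial` port from the pyserial package or an in-memory buffer for testing.

```python
import io
from typing import BinaryIO

# Hypothetical one-byte codes for the four responses; the real encoding
# used between BCI2000 and the Bluefruit Feather is not documented here.
RESPONSE_CODES = {0: b"A", 1: b"B", 2: b"C", 3: b"D"}


def encode_response(class_index: int) -> bytes:
    """Map a classifier output (0 to 3) to the byte sent to the microcontroller."""
    try:
        return RESPONSE_CODES[class_index]
    except KeyError:
        raise ValueError(f"expected a class index from 0 to 3, got {class_index}") from None


def send_response(port: BinaryIO, class_index: int) -> int:
    """Write one encoded response to a serial-like binary stream and return the byte count."""
    written = port.write(encode_response(class_index))
    port.flush()  # push the command out immediately so the device reacts in real time
    return written


if __name__ == "__main__":
    # An in-memory buffer stands in for the serial port in this example.
    buffer = io.BytesIO()
    for decision in (0, 3, 1):
        send_response(buffer, decision)
    print(buffer.getvalue())  # b'ADB'
```

On the microcontroller side, each received byte would then be translated into the corresponding Bluetooth switch event; that firmware is not shown here.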

A paired iOS device treats the Bluetooth inputs as Switch Control inputs and responds by performing a preset action (in my case, a double tap for a positive emotion, otherwise a swipe).

Overall, the system achieved 82.86% accuracy. Below is a sample run on TikTok, in which I give feedback by raising (like) or lowering (dislike) my thumb before the BCI determines my emotion and, consequently, the input sent to the app:

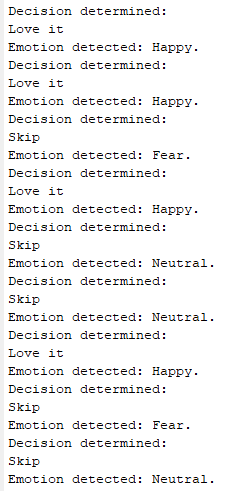

This is the output from the MATLAB program for the video shown above:

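Assuming the reported accuracy is the share of trials in which the BCI decision matched the thumb feedback given beforehand, it can be computed as in this sketch. The function name and the trial labels in the example are made up for illustration and are not the data behind the 82.86% figure.

```python
from typing import Sequence


def online_accuracy(feedback: Sequence[str], decoded: Sequence[str]) -> float:
    """Percentage of trials where the BCI decision matches the user's thumb feedback."""
    if len(feedback) != len(decoded):
        raise ValueError("feedback and decoded must have the same length")
    if not feedback:
        raise ValueError("at least one trial is required")
    hits = sum(f == d for f, d in zip(feedback, decoded))  # True counts as 1
    return 100.0 * hits / len(feedback)


if __name__ == "__main__":
    # Made-up example trials: thumb up = "like", thumb down = "dislike".
    thumbs = ["like", "dislike", "like", "like"]
    bci = ["like", "like", "like", "like"]
    print(f"{online_accuracy(thumbs, bci):.2f}%")  # 75.00%
```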


Advantages and Disadvantages

+ This is a very versatile system with a lot of potential, and it shows how a BCI can interface with everyday devices.

- The system is bulky and not portable.

- The recordings may contain artifacts, because the selected recording sites are very close to major facial muscles.